In Python app development, the choice between multithreading and multiprocessing has a direct effect on your application’s performance and responsiveness.

These parallel programming techniques allow you to harness the latent power of modern hardware, enabling tasks to execute concurrently and enhancing user experience.

This comprehensive guide delves deep into the intricacies of multiprocessing vs multithreading in Python, dissecting their strengths, limitations, and optimal use cases.

It also provides actionable insights to guide your decision-making and help you optimize your development projects.

Let’s get right into it:

Understanding Parallelism

As a Python app developer, you’re constantly seeking ways to optimize your code for performance and responsiveness.

That’s where parallelism comes in.

Imagine a symphony where multiple instruments play different parts simultaneously, creating a harmonious result.

This is the essence of parallelism in computing.

Parallelism involves breaking down complex tasks into smaller subtasks and executing them concurrently, resulting in faster execution and improved efficiency.

Multiprocessing and multithreading are the two main vehicles that allow you to do this in your Python applications.

To better understand how the two compare, let’s discuss each of them below.

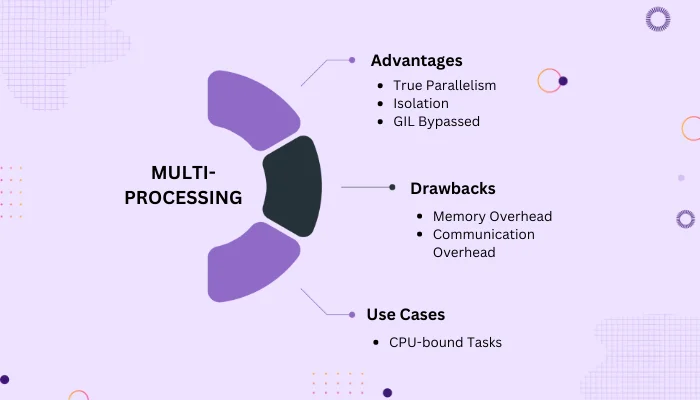

Multiprocessing

One of the first questions people ask is: what is multiprocessing?

When you opt for multiprocessing, you’re creating separate processes, each with its own memory space and its own Python interpreter.

This grants you the power of true parallel execution, as these processes can be allocated to different CPU cores, maximizing the use of your hardware resources.

But why would you do it?

There are several good reasons. Let’s look at a few of them below:

Advantages of Multiprocessing

- True Parallelism: The ability to run processes independently results in true parallel execution, utilizing multiple CPU cores simultaneously.

- Isolation: Each process operates in its isolated memory space, ensuring stability and fault tolerance. A crash in one process doesn’t impact others.

- GIL Bypassed: Unlike threads, each process runs its own interpreter with its own Global Interpreter Lock (GIL), so one process never blocks another and all CPU cores can be used.

Drawbacks of Multiprocessing

As there are advantages, there are also disadvantages:

- Memory Overhead: Since each process has its own memory space, there’s a higher memory overhead compared to multithreading.

- Communication Overhead: Inter-process communication can be more complex and slower than inter-thread communication.

Use Cases for Multiprocessing

- CPU-bound tasks: Multiprocessing shines when tackling tasks that require significant computation, such as data-intensive operations, simulations, and complex mathematical calculations.

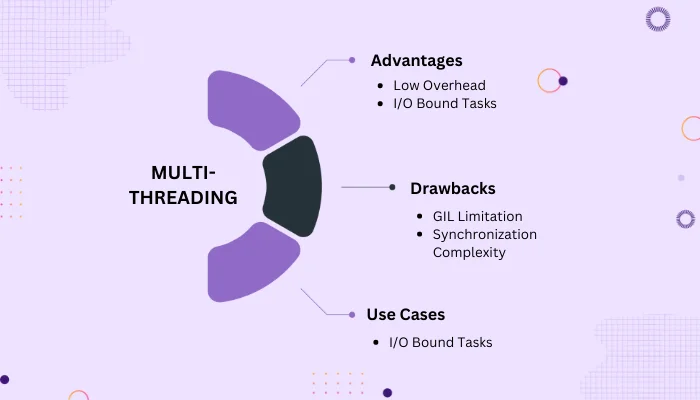

Multithreading

Now it’s time to look at the other side of the multithreading vs multiprocessing comparison:

In multithreading, you’re creating multiple threads within a single process, and these threads share the same memory space and Python interpreter.

Threads are lightweight and are particularly well-suited for tasks that involve frequent I/O operations.

Advantages of Multithreading

- Low Overhead: Threads are lighter on resources compared to processes, making them efficient for quick creation and management.

- I/O-bound tasks: Multithreading is your ally when dealing with tasks that spend a considerable amount of time waiting for external resources, such as file I/O, network requests, and user input.

Drawbacks of Multithreading

- GIL Limitation: In standard CPython, the GIL lets only one thread execute Python bytecode at a time. This restricts true parallelism and makes multithreading less effective for CPU-bound tasks where intensive computation is required.

- Synchronization Complexity: Managing shared resources and synchronizing threads can lead to complex and error-prone code, susceptible to deadlocks and race conditions.

Use Cases for Multithreading

- I/O-bound tasks: Applications that rely heavily on interacting with external resources, like web scraping, downloading files, and real-time monitoring, benefit from the responsiveness of multithreading.

Multiprocessing vs. Multithreading Comparison

| Aspect | Multiprocessing | Multithreading |
| --- | --- | --- |
| Parallel Execution | True parallel execution with separate memory spaces | Concurrent execution within a shared memory space; threads take turns running Python code |
| CPU Utilization | Can use all available CPU cores | Limited by the Global Interpreter Lock (GIL) |
| Isolation | Processes are isolated, enhancing fault tolerance | Threads share memory, potential for interference |
| Memory Overhead | Higher memory overhead due to separate memory spaces | Lower memory overhead due to shared memory space |
| Communication Overhead | Inter-process communication can be slower and complex | Inter-thread communication is faster and simpler |
| Suitability | Ideal for CPU-bound tasks | Suitable for I/O-bound tasks |

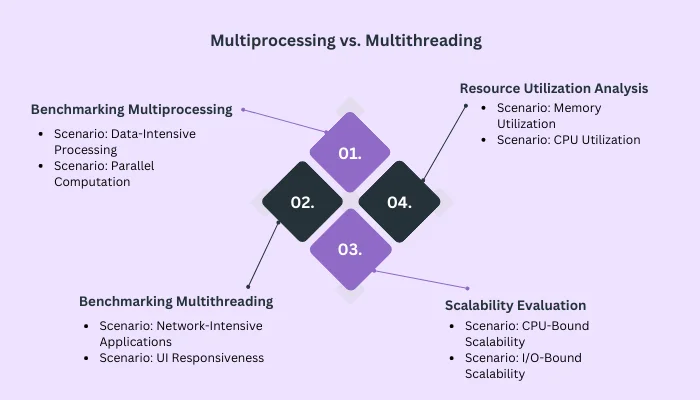

Benchmarking Multiprocessing

Scenario: Data-Intensive Processing

Suppose you’re working with a large dataset that requires extensive processing. Multiprocessing excels in this scenario.

By dividing the dataset into smaller chunks and processing them concurrently, you can significantly reduce processing time.

Each process operates independently, utilizing separate CPU cores to expedite computation.
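The chunk-and-process idea can be sketched as follows. This is a minimal illustration, not a benchmark: `chunked`, `sum_of_squares`, and `parallel_sum_of_squares` are hypothetical helper names, and the per-chunk work is a stand-in for whatever processing your dataset actually needs.

```python
import multiprocessing


def chunked(data, size):
    """Split a list into consecutive chunks of at most `size` items."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_of_squares(chunk):
    """Hypothetical CPU-heavy work applied to one chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, chunk_size, workers=None):
    """Process the chunks in a pool of worker processes and combine the results."""
    chunks = chunked(data, chunk_size)
    # workers=None lets the pool default to the number of available CPUs
    with multiprocessing.Pool(processes=workers) as pool:
        partials = pool.map(sum_of_squares, chunks)
    return sum(partials)


if __name__ == "__main__":
    data = list(range(1_000_000))
    print(parallel_sum_of_squares(data, chunk_size=100_000))
```

Chunk size is a trade-off: chunks that are too small spend more time on inter-process communication than on computation, so it is worth experimenting with your own data.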

Scenario: Parallel Computation

In scenarios where you need to perform complex mathematical calculations or simulations, multiprocessing proves its mettle.

Each process can handle a different calculation, achieving true parallelism and optimizing CPU utilization.

Benchmarking Multithreading

Scenario: Network-Intensive Applications

For applications heavily reliant on network communication, multithreading offers a compelling advantage.

Consider a web crawler tasked with extracting data from multiple websites.

By employing multithreading, each thread can manage a distinct website, allowing for simultaneous data retrieval and accelerating the application’s responsiveness.
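As a rough sketch of the crawler scenario, the example below uses a thread pool where each task handles one URL. To keep it self-contained, `fetch` is a hypothetical stand-in that sleeps instead of making a real network request, and the URLs are placeholders.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch(url):
    """Stand-in for a network request: sleeps instead of hitting the network."""
    time.sleep(0.2)  # simulated network latency
    return f"content of {url}"


def crawl(urls, max_workers=5):
    """Fetch all URLs concurrently; results come back in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        return list(executor.map(fetch, urls))


if __name__ == "__main__":
    urls = [f"https://example.com/page{i}" for i in range(5)]
    start = time.perf_counter()
    pages = crawl(urls)
    elapsed = time.perf_counter() - start
    print(f"Fetched {len(pages)} pages in {elapsed:.2f}s")
```

Because the threads wait at the same time, the five simulated requests should take roughly as long as one request rather than five times as long.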

Scenario: UI Responsiveness

User interface responsiveness is paramount in app development.

When dealing with user interactions and real-time updates, multithreading plays a pivotal role.

By keeping UI updates on the main thread and delegating long-running work to background threads, you ensure that the interface remains responsive while that work is in progress.

Scalability Evaluation

Scenario: CPU-Bound Scalability

As your application’s workload increases, ensuring optimal performance is vital. Multiprocessing shines in CPU-bound scenarios, where computational tasks dominate.

The ability to distribute tasks across multiple processes and CPU cores results in efficient scaling, allowing your application to handle heavier workloads without sacrificing responsiveness.

Scenario: I/O-Bound Scalability

When scalability is a concern for I/O-bound tasks, multithreading emerges as a compelling solution.

Applications that involve frequent interactions with external resources, such as database queries or network requests, benefit from multithreading’s ability to handle multiple I/O operations concurrently.

This ensures that the application remains responsive even as the user load grows.

Resource Utilization Analysis

Scenario: Memory Utilization

In resource-intensive scenarios where memory utilization is a concern, multithreading has an advantage.

Since threads share the same memory space within a process, the overhead associated with memory allocation and management is lower compared to multiprocessing, where each process has its own memory space.

Scenario: CPU Utilization

For tasks that require extensive computation and can fully utilize multiple CPU cores, multiprocessing is the preferred choice.

By distributing tasks among separate processes, you can maximize CPU utilization and achieve true parallel execution.

Best Practices for Multiprocessing and Multithreading

If you want to implement multithreading, multiprocessing, or both in a GUI application or any other kind of Python application, here are some best practices to follow:

Utilize Thread Pools and Process Pools

When dealing with dynamic task allocation, thread pools and process pools prove invaluable.

These pools maintain a set of reusable workers, reducing the overhead of creating and destroying threads or processes frequently.
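The standard library’s `concurrent.futures` module offers both kinds of pool behind the same interface. The sketch below assumes a trivial task (`square`) and a hypothetical helper (`run_in_pool`) purely for illustration.

```python
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def square(n):
    """Small task; defined at module level so worker processes can import it."""
    return n * n


def run_in_pool(executor_cls, func, items, max_workers=4):
    """Run func over items using a reusable pool of workers."""
    with executor_cls(max_workers=max_workers) as executor:
        return list(executor.map(func, items))


if __name__ == "__main__":
    numbers = range(10)
    print(run_in_pool(ThreadPoolExecutor, square, numbers))
    print(run_in_pool(ProcessPoolExecutor, square, numbers))
```

Since both executors share one API, switching between threads and processes is often a one-line change, which makes it easy to measure which one suits a given workload.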

Graceful Shutdown and Exception Handling

Developing robust multithreaded or multiprocess applications requires a strong focus on error handling.

Implement mechanisms for graceful shutdowns to ensure proper termination of threads or processes.

Effective exception handling prevents crashes from propagating throughout your codebase, maintaining the stability of your application.
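One common way to approach both concerns is sketched below: a `threading.Event` signals a worker to stop cleanly, and exceptions raised inside pool tasks are collected through their futures rather than being lost. The `worker`, `risky_task`, and `run_tasks` names are hypothetical.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

stop_event = threading.Event()


def worker(results):
    """Loop until asked to stop, checking the event between units of work."""
    while not stop_event.is_set():
        results.append("tick")
        stop_event.wait(0.05)  # pause, but wake immediately on shutdown


def risky_task(n):
    """Hypothetical task that fails for one particular input."""
    if n == 3:
        raise ValueError(f"cannot process {n}")
    return n * 2


def run_tasks(inputs):
    """Collect results and errors separately so one failure does not stop the run."""
    results, errors = [], []
    with ThreadPoolExecutor(max_workers=4) as executor:
        futures = {executor.submit(risky_task, n): n for n in inputs}
        for future, n in futures.items():
            try:
                results.append(future.result())  # re-raises the task's exception
            except ValueError as exc:
                errors.append((n, str(exc)))
    return results, errors


if __name__ == "__main__":
    ticks = []
    thread = threading.Thread(target=worker, args=(ticks,))
    thread.start()
    time.sleep(0.2)
    stop_event.set()  # request shutdown
    thread.join()     # wait for the worker to exit cleanly
    print(f"worker stopped after {len(ticks)} ticks")
    print(run_tasks(range(5)))
```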

Properly Manage Shared Resources

In scenarios where threads or processes access shared resources, ensuring data consistency is crucial.

Proper synchronization mechanisms, such as locks, semaphores, and conditions, prevent race conditions and data corruption.
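A minimal sketch of lock-based synchronization is shown below. The `Counter` class and `run` helper are hypothetical; the point is that the read-modify-write in `increment` is guarded so concurrent threads cannot interleave inside it.

```python
import threading


class Counter:
    """Counter whose increments are protected by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # `self.value += 1` is a read-modify-write, so guard it with the lock.
        with self._lock:
            self.value += 1


def run(num_threads=8, increments=10_000):
    """Increment one shared counter from several threads and return the total."""
    counter = Counter()

    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


if __name__ == "__main__":
    print(run())
```

With the lock in place, the final value always equals `num_threads * increments`. Without it, updates could be lost whenever two threads interleave inside the increment.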

Implement Throttling and Rate Limiting

Efficient resource management is essential for maintaining stability and preventing resource exhaustion.

Implement throttling mechanisms to control the rate at which threads or processes access resources.

Rate limiting ensures that resource-intensive operations, such as network requests, are performed judiciously, preventing overwhelming external systems.
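One simple form of throttling is to cap how many operations run at once using a semaphore, as sketched below. Note that this limits concurrency rather than requests per second; a true rate limiter (for example a token bucket) is not shown. `MAX_CONCURRENT` and `limited_request` are assumptions chosen for illustration.

```python
import threading
import time

MAX_CONCURRENT = 2  # assumed limit, chosen for illustration
slots = threading.BoundedSemaphore(MAX_CONCURRENT)

active = 0  # requests currently in flight
peak = 0    # highest number of simultaneous requests observed
state_lock = threading.Lock()


def limited_request(i):
    """Simulated request; at most MAX_CONCURRENT of these run at once."""
    global active, peak
    with slots:  # blocks while all slots are taken
        with state_lock:
            active += 1
            peak = max(peak, active)
        time.sleep(0.05)  # stand-in for the real network call
        with state_lock:
            active -= 1


def run(num_requests=6):
    """Start all requests at once and report the observed peak concurrency."""
    threads = [
        threading.Thread(target=limited_request, args=(i,))
        for i in range(num_requests)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak


if __name__ == "__main__":
    print(f"peak concurrent requests: {run()}")
```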

Use Thread-Safe and Process-Safe Data Structures

To mitigate conflicts arising from concurrent access, opt for thread-safe and process-safe data structures.

Python’s standard library offers data structures designed for concurrent access, such as `queue.Queue` for threads and `multiprocessing.Queue` for processes, which help ensure data integrity and consistency.
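A minimal producer/consumer sketch using the standard library’s `queue.Queue`, which handles the locking internally, is shown below. The `producer`, `consumer`, and `run` functions and the `SENTINEL` marker are hypothetical names; `multiprocessing.Queue` plays a similar role between processes.

```python
import queue
import threading

SENTINEL = None  # tells the consumer that no more items are coming


def producer(q, items):
    """Put every item on the queue, then signal completion."""
    for item in items:
        q.put(item)
    q.put(SENTINEL)


def consumer(q, results):
    """Take items off the queue until the sentinel arrives."""
    while True:
        item = q.get()
        if item is SENTINEL:
            break
        results.append(item * 2)


def run(items):
    q = queue.Queue()
    results = []
    threads = [
        threading.Thread(target=producer, args=(q, items)),
        threading.Thread(target=consumer, args=(q, results)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    print(run([1, 2, 3]))
```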

Consider CPU Affinity

For multiprocessing scenarios, consider employing CPU affinity to bind processes to specific CPU cores.

This approach can improve cache locality by preventing a process from migrating between cores, although the benefit depends on the workload and should be measured rather than assumed.
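On platforms that support it, affinity can be set from Python with `os.sched_setaffinity`. This call is only available on some Unix platforms (notably Linux), so the sketch below checks for it first; `pin_to_cpus` is a hypothetical helper name.

```python
import os


def pin_to_cpus(cpus):
    """Try to restrict the current process to the given CPU indices.

    Returns the resulting affinity set, or None where the call is unavailable.
    """
    if not hasattr(os, "sched_setaffinity"):
        return None  # not every platform exposes this call
    os.sched_setaffinity(0, cpus)  # pid 0 means the calling process
    return os.sched_getaffinity(0)


if __name__ == "__main__":
    if hasattr(os, "sched_getaffinity"):
        allowed = os.sched_getaffinity(0)
        first = min(allowed)
        print(pin_to_cpus({first}))
        os.sched_setaffinity(0, allowed)  # restore the original affinity
    else:
        print("CPU affinity is not available on this platform")
```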

Profile and Monitor Your Code

Before optimizing, develop a clear understanding of your application’s performance by using profiling and monitoring tools.

Profiling tools help identify performance bottlenecks and areas for optimization, while monitoring tools provide insights into resource utilization and potential issues during runtime.
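As a starting point, the standard library’s `cProfile` and `pstats` modules can show where time is spent. In the sketch below, `slow_sum` is a hypothetical workload and `profile_call` is a hypothetical helper that returns the report as text.

```python
import cProfile
import io
import pstats


def slow_sum(n):
    """Hypothetical workload to profile."""
    return sum(i * i for i in range(n))


def profile_call(func, *args):
    """Run func under cProfile and return (result, text report)."""
    profiler = cProfile.Profile()
    profiler.enable()
    result = func(*args)
    profiler.disable()

    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(5)  # top 5 by cumulative time
    return result, buffer.getvalue()


if __name__ == "__main__":
    result, report = profile_call(slow_sum, 1_000_000)
    print(report)
```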

Design for Scalability

As you architect your application, prioritize scalability to accommodate growing workloads.

Implement dynamic resource allocation strategies and load balancing mechanisms to ensure efficient utilization of resources as user demand increases.

Prioritize I/O Operations

For applications characterized by frequent interactions with external resources, such as databases, APIs, or file systems, multithreading shines.

Moreover, prioritize tasks involving I/O operations for multithreading to capitalize on its responsiveness and maintain a smooth user experience.

Leverage Asynchronous Programming

In scenarios dominated by I/O-bound tasks, consider leveraging asynchronous programming libraries such as `asyncio`.

Asynchronous programming allows you to manage multiple tasks concurrently within a single thread, optimizing resource utilization and enhancing responsiveness.
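A minimal `asyncio` sketch is shown below, with `asyncio.sleep` standing in for real I/O. The `fetch` coroutine and its arguments are hypothetical.

```python
import asyncio


async def fetch(name, delay):
    """Simulated I/O: awaiting the sleep yields control to other tasks."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def main():
    # All three coroutines wait concurrently within a single thread.
    return await asyncio.gather(
        fetch("a", 0.1),
        fetch("b", 0.1),
        fetch("c", 0.1),
    )


if __name__ == "__main__":
    print(asyncio.run(main()))
```

`asyncio.gather` returns the results in the order the awaitables were passed in, regardless of which one finishes first.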

Practical Execution: Exploring IO-Bound and CPU-Bound Functions

Now, let’s delve into the practical execution of IO-bound and CPU-bound functions using both multiprocessing and multithreading.

This hands-on approach will provide you with real-world insights into how these parallel programming techniques behave in different scenarios.

You’ll witness the impact of parallelism on performance and responsiveness firsthand.

IO-Bound Function: Simulating Idle Time

Imagine a scenario where you’re waiting for data from external sources, such as fetching information from various websites.

In this case, the CPU can sit idle while you’re waiting for the data to arrive. Let’s see how IO-bound tasks fare using both multiprocessing and multithreading.

Using Multiprocessing for IO-Bound

```python
import multiprocessing
import time


def io_bound_function():
    print("IO-bound function using multiprocessing started")
    time.sleep(3)  # Simulating waiting for external data
    print("IO-bound function using multiprocessing finished")


if __name__ == "__main__":
    process = multiprocessing.Process(target=io_bound_function)
    process.start()
    process.join()
    print("Main process continued")
```

Using Multithreading for IO-Bound

```python
import threading
import time


def io_bound_function():
    print("IO-bound function using multithreading started")
    time.sleep(3)  # Simulating waiting for external data
    print("IO-bound function using multithreading finished")


if __name__ == "__main__":
    thread = threading.Thread(target=io_bound_function)
    thread.start()
    thread.join()
    print("Main thread continued")
```

CPU-Bound Function: Crunching Numbers

In contrast, consider a situation where you’re performing heavy calculations that keep the CPU occupied.

This is a classic CPU-bound scenario. Let’s explore how CPU-bound tasks behave using both multiprocessing and multithreading.

Using Multiprocessing for CPU-Bound

```python
import multiprocessing


def cpu_bound_function(n):
    result = 0
    for _ in range(n):
        # Pure-Python arithmetic keeps one CPU core busy
        result += sum(i * i for i in range(1000000))
    print("CPU-bound function using multiprocessing finished")


if __name__ == "__main__":
    process = multiprocessing.Process(target=cpu_bound_function, args=(4,))
    process.start()
    process.join()
    print("Main process continued")
```

Using Multithreading for CPU-Bound

```python
import threading


def cpu_bound_function(n):
    result = 0
    for _ in range(n):
        # Pure-Python arithmetic keeps one CPU core busy
        result += sum(i * i for i in range(1000000))
    print("CPU-bound function using multithreading finished")


if __name__ == "__main__":
    thread = threading.Thread(target=cpu_bound_function, args=(4,))
    thread.start()
    thread.join()
    print("Main thread continued")
```
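Each snippet above starts a single worker, so on its own it will not reveal a difference between the two approaches. To actually compare them, you could run the same CPU-bound function across several workers and time each run. The sketch below is one way to do that; `cpu_bound`, `timed_run`, and the workload sizes are assumptions chosen for illustration.

```python
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def cpu_bound(n):
    """Pure-Python loop that keeps one core busy."""
    return sum(i * i for i in range(n))


def timed_run(executor_cls, workloads, max_workers=4):
    """Return (results, elapsed seconds) for running cpu_bound over workloads."""
    start = time.perf_counter()
    with executor_cls(max_workers=max_workers) as executor:
        results = list(executor.map(cpu_bound, workloads))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    workloads = [2_000_000] * 4
    _, thread_time = timed_run(ThreadPoolExecutor, workloads)
    _, process_time = timed_run(ProcessPoolExecutor, workloads)
    print(f"threads:   {thread_time:.2f}s")
    print(f"processes: {process_time:.2f}s")
```

On a standard CPython build with several cores, the process-based run would typically finish faster for this kind of workload, but the actual numbers depend on your hardware and Python version, so treat any single measurement with caution.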

The Relevance to Python App Development

So, how is all of this relevant to Python app development?

Here’s how:

CPU-Bound Applications

In the realm of CPU-bound applications, where tasks involve intensive computational operations, multiprocessing emerges as a key player.

By harnessing the power of multiple CPU cores, multiprocessing enables efficient parallel execution, accelerating computation-intensive processes and reducing overall execution time.

I/O-Bound Applications

For applications primarily engaged in I/O operations, such as network requests, file operations, and database interactions, multithreading offers a compelling advantage.

By utilizing separate threads for I/O-bound tasks, you can ensure that waiting times are minimized, enhancing application responsiveness and user experience.

Choosing Between Multiprocessing and Multithreading

The decision between multiprocessing and multithreading should be based on the nature of your tasks and the characteristics of your application.

For CPU-bound tasks that demand intensive computation, multiprocessing is preferable. On the other hand, for applications with I/O-bound operations that involve frequent waiting, multithreading shines.

Several factors should guide your decision-making process:

Task Characteristics

Consider the nature of the tasks your application performs. If your tasks involve heavy computation, multiprocessing might be the better choice.

For I/O-bound tasks, multithreading can deliver enhanced responsiveness.

GIL Considerations

The Global Interpreter Lock (GIL) significantly impacts multithreading’s ability to achieve true parallelism.

If your application is dominated by CPU-bound tasks that can benefit from utilizing multiple CPU cores, multiprocessing might be more suitable.

Resource Consumption

Evaluate the resource requirements of your application.

Multiprocessing generally consumes more memory due to separate memory spaces for processes.

Multithreading, with its shared memory space, can be more memory-efficient in certain scenarios.

Complexity and Compatibility

Consider the complexity of managing threads or processes, especially in scenarios involving shared resources and synchronization.

In addition to this, assess the compatibility of multiprocessing or multithreading with your existing libraries and frameworks.

Conclusion

In the intricate realm of Python app development, the choice between multiprocessing vs multithreading is a decision that significantly influences your application’s performance and efficiency.

The distinction between these techniques, their strengths, limitations, and optimal applications, all have implications for your development endeavors.

If you want to learn about this in more detail, we highly recommend learning from the resources published by top Python development companies.

FAQ

Multiprocessing creates separate processes, achieving true parallelism, while multithreading involves threads sharing a process’s memory space.

GIL restricts true parallelism in multithreading, making it less effective for CPU-bound tasks. Multiprocessing bypasses the GIL for CPU-bound tasks.

Yes, it’s possible to combine them for specific scenarios. However, tread carefully, as managing parallelism from both techniques can be complex.

Thread pools and process pools maintain reusable workers, reducing overhead and optimizing resource management for improved efficiency.

Profiling tools identify performance bottlenecks, and monitoring tools track resource usage and potential issues, enabling optimization.

Multithreading is ideal for GUI applications as it ensures the interface remains responsive even during background operations.

Consider task characteristics, GIL impact, resource usage, and complexity. Opt for multiprocessing for CPU-bound tasks and multithreading for I/O-bound tasks.

Async programming, like `asyncio`, allows multitasking within a single thread for I/O-bound tasks. It’s different from multithreading but offers similar responsiveness.

Use profiling tools to analyze task execution times. Adjust granularity – the size of tasks – based on the analysis for optimal performance.

Load balancing ensures tasks are evenly distributed among threads or processes, optimizing resource utilization and improving efficiency.

Yes, algorithms following the divide-and-conquer principle can often be optimized for parallel execution to harness the power of multiple cores.

Niketan Sharma is the CTO of Nimble AppGenie, a prominent website and mobile app development company in the USA that is delivering excellence with a commitment to boosting business growth & maximizing customer satisfaction. He is a highly motivated individual who helps SMEs and startups grow in this dynamic market with the latest technology and innovation.
